Abstract

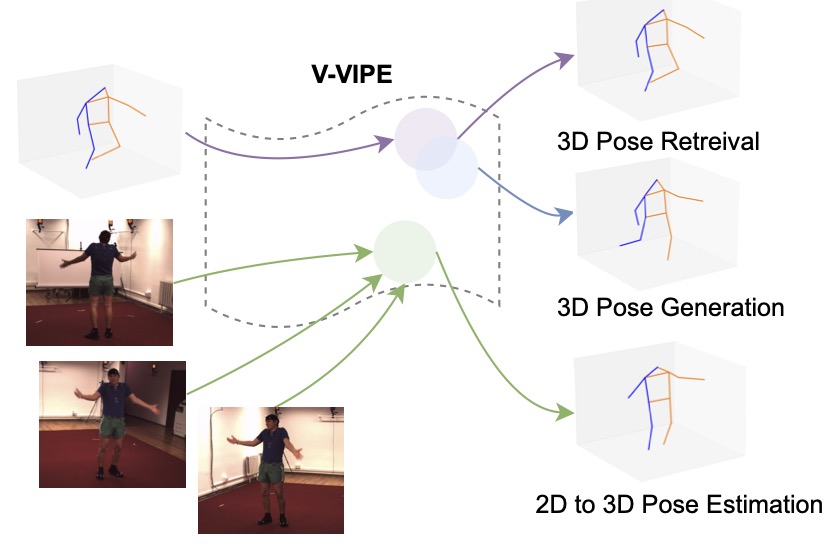

Learning to represent three dimensional (3D) human pose given a two dimensional (2D) image of a person, is a challenging problem. In order to make the problem less ambiguous it has become common practice to estimate 3D pose in the camera coordinate space. However, this makes the task of comparing two 3D poses difficult. In this paper, we address this challenge by separating the problem of estimating 3D pose from 2D images into two steps. We use a variational autoencoder (VAE) to find an embedding that represents 3D poses in canonical coordinate space. We refer to this embedding as variational view-invariant pose embedding (V-VIPE). Using V-VIPE we can encode 2D and 3D poses and use the embedding for downstream tasks, like retrieval and classification. We can estimate 3D poses from these embeddings using the decoder as well as generate unseen 3D poses. The variability of our encoding allows it to generalize well to unseen camera views when mapping from 2D space. To the best of our knowledge, V-VIPE is the only representation to offer this diversity of applications.

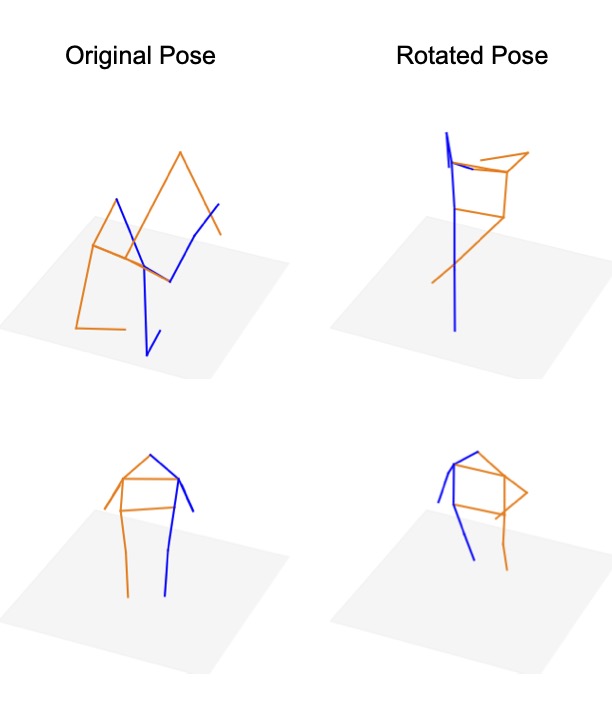

Data Processing

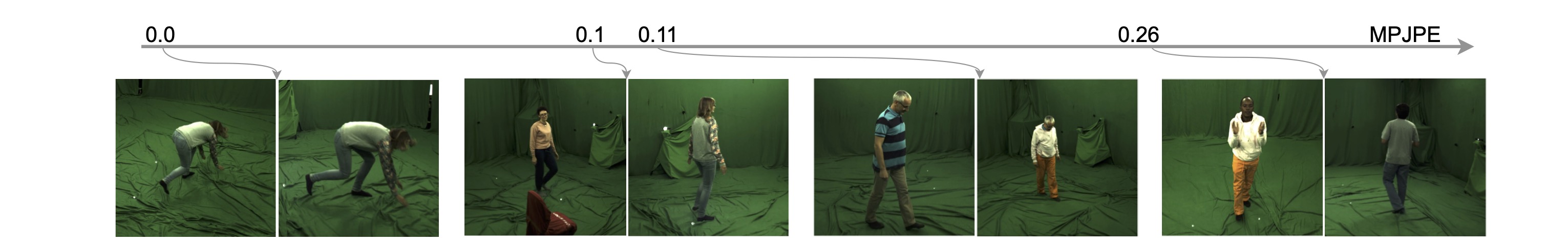

In typical 3D pose estimation work the pose is found in camera space. However as, seen in the image below a pose taken from different viewpoints can be very different, even when represented in 3D.

In order to compare poses from different view points we remove any rotation from the original pose and train only on 3D poses that are aligned to each plane.

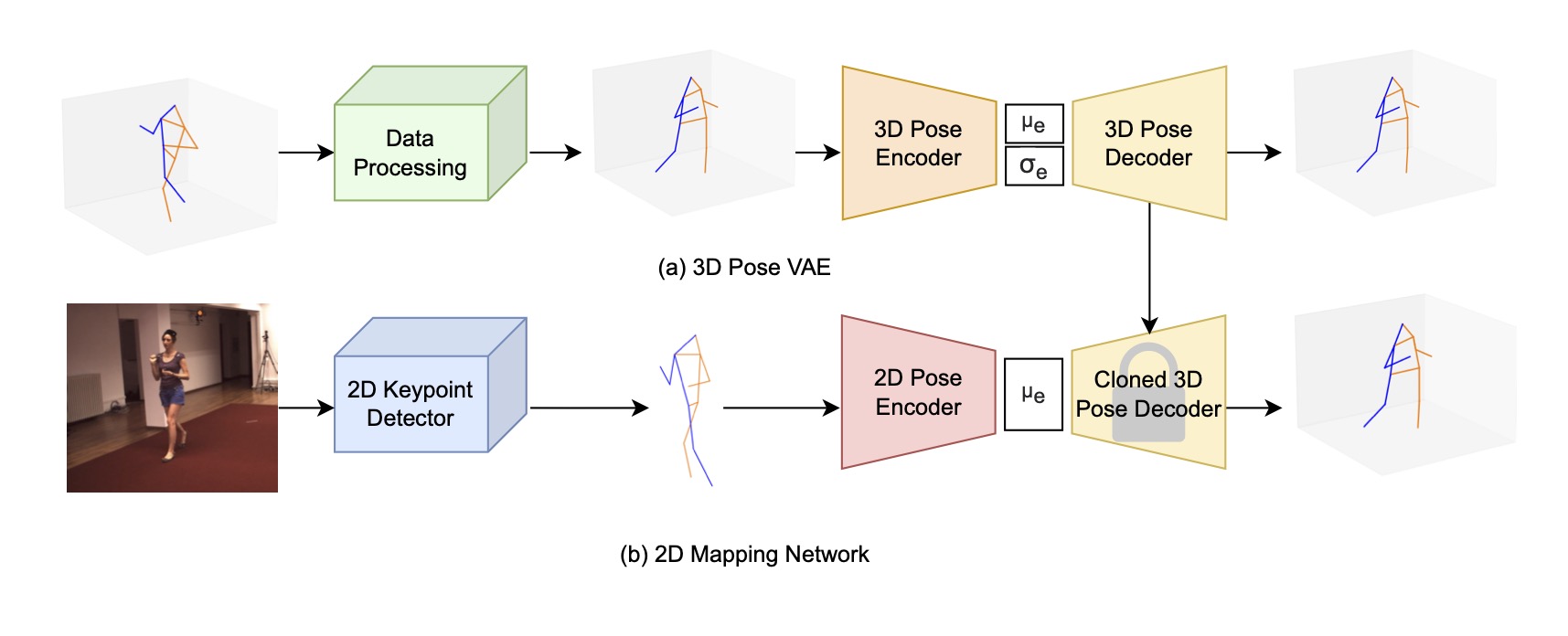

Model Overview

V-Vipe has 3 components. A data processing step in which any rotation information is removed fro the 3D representation as described above. Next a 3D Encoder and Decoder are trained as a Variational Auto Encoder. In addition to the VAE losses a triplet loss is added which pushes similar poses close together in the embedding space. Finally a 2D Encoder is trained to map 2D poses to the embedding space defined above.

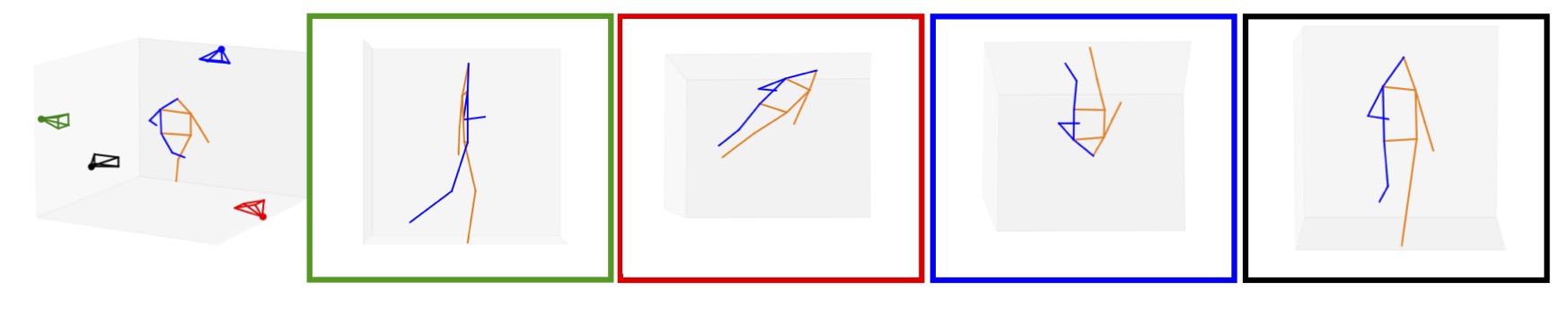

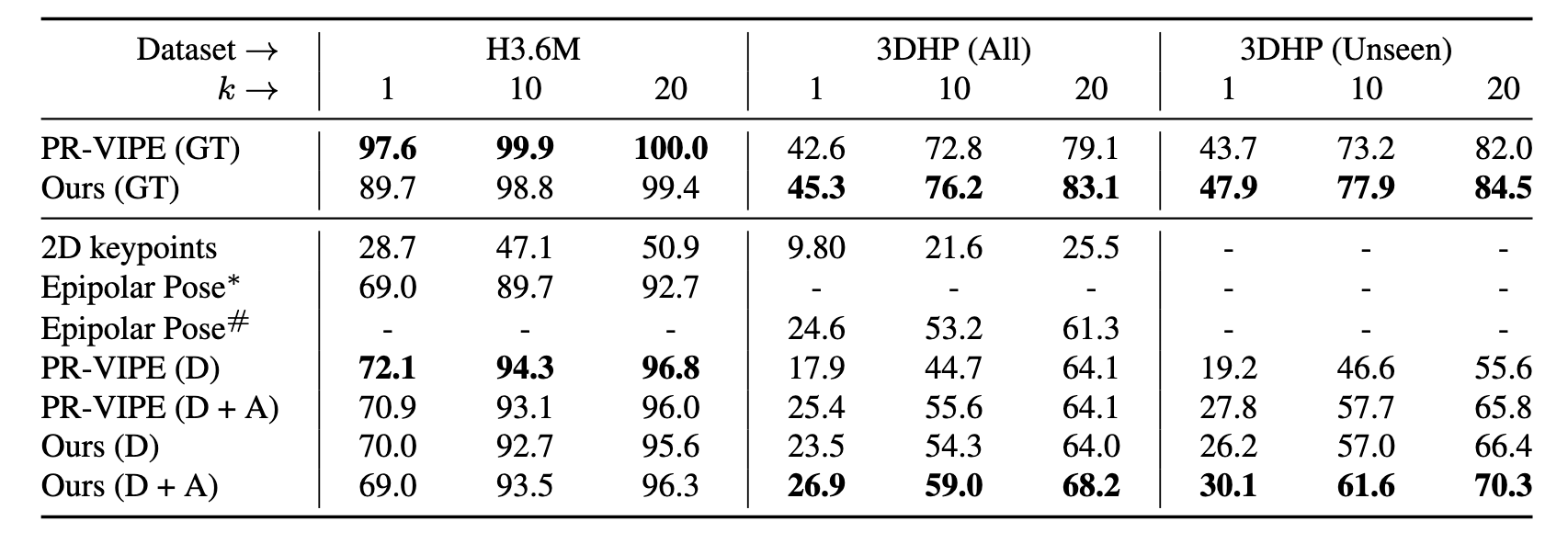

Pose Retrieval

Using the 2D Embedder described above we can find the embeddings for many 2D poses from different camera view points and query any pose to find the most similar pose from another view point. Following prior work we evaluate how often our model finds a similar pose by looking at the hit metric. Because our model has generative capabilities we find that it generalizes better to unseen poses as well as unseen camera viewpoints.

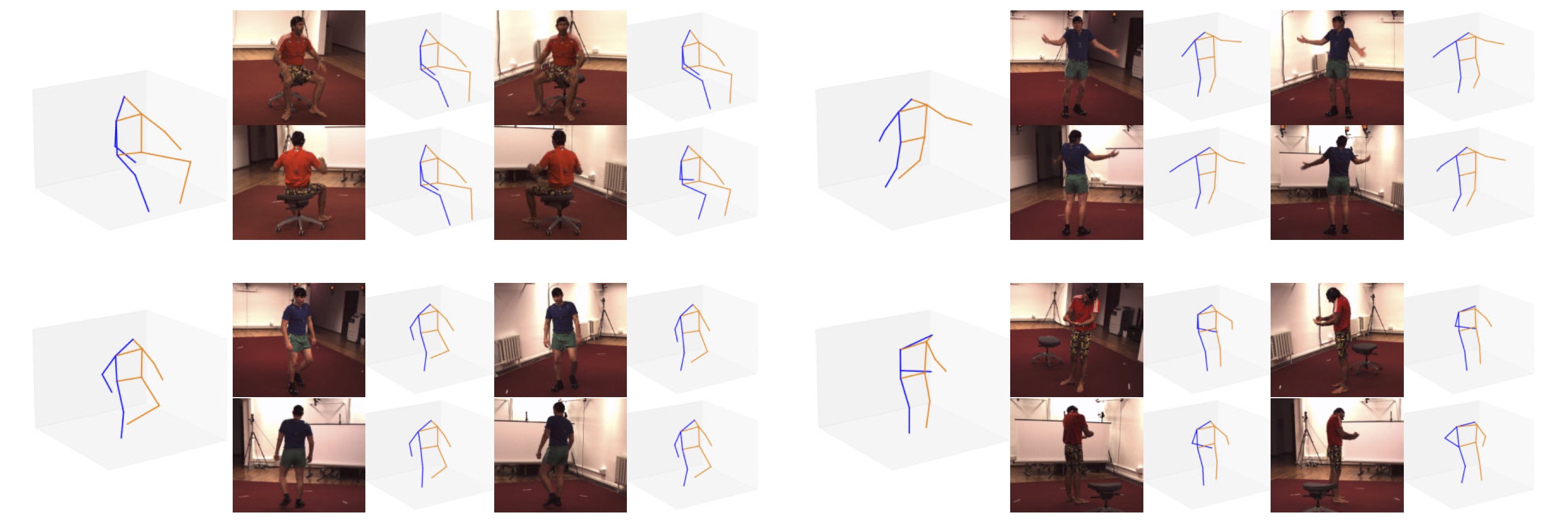

Pose Estimation

In addition to being able to retrieve similar poses our method can estimate new poses.

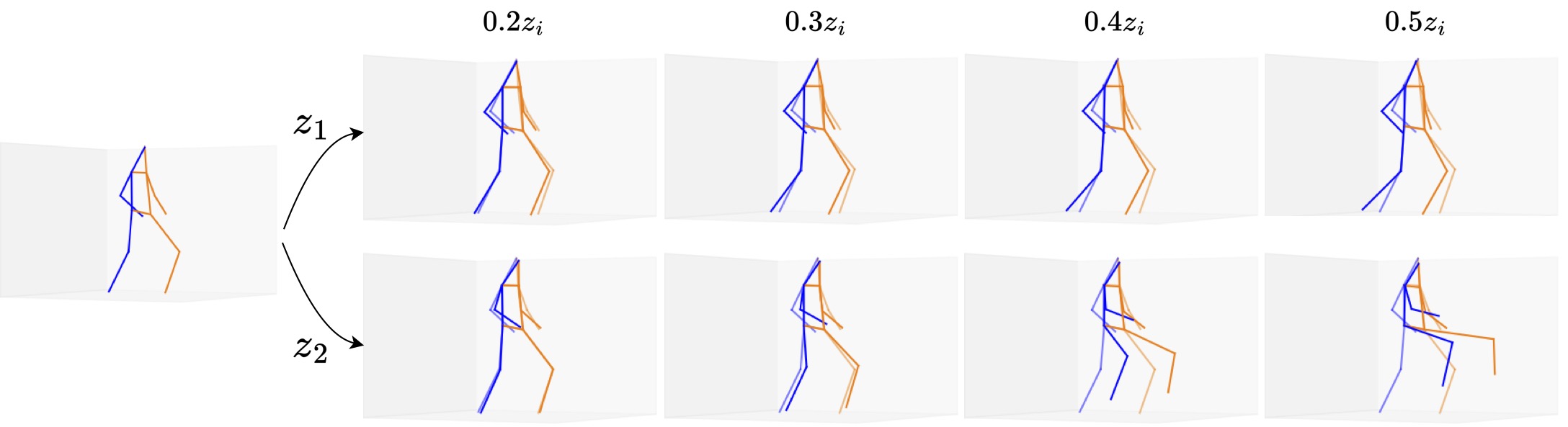

Pose Generation

We can add noise to the embedding space to find new poses

Additionally, we can take two poses and generate poses in between.